If you’ve noticed that ChatGPT 5 or 5.1 seems to be struggling lately, you’re not alone. Our team relies heavily on AI tools for research, troubleshooting, and content development. After weeks of side-by-side testing, we reached a clear conclusion: ChatGPT 5.1 isn’t ready to handle the majority of our technical workloads.

Several developers and power users have observed the same thing. While 5.1 shows promise in conversational style, its practical performance, especially in structured tasks, often falls short of ChatGPT 4o, 4.1, or Claude Sonnet 4.5. Let’s unpack why that’s happening and what to use instead.

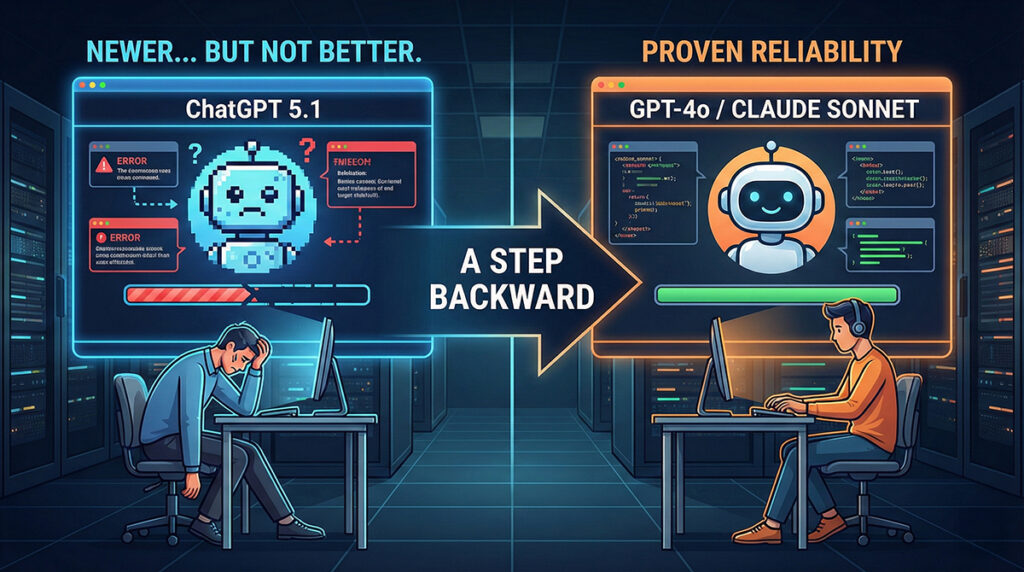

When “Newer” Doesn’t Mean “Better”

OpenAI positioned ChatGPT 5.1 as a more natural communicator. However, many users report that the model tends to second-guess itself, lose context mid-conversation, or introduce uncertainty in straightforward responses. According to Reddit discussions from November 2025, several users considered it a downgrade in flow compared to GPT-4.1.

In our internal testing, ChatGPT 5.1 often needed about twice as many prompts to reach the accuracy that ChatGPT 4o reached sooner and with fewer hallucinations. For research or troubleshooting, that’s a real productivity hit.
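For readers who want to run a similar comparison, here is a minimal sketch of how side-by-side trials could be tallied. It assumes you log, for each model and task, how many prompts it took to get an acceptable answer and whether any response contained a hallucination. The model labels, task IDs, and numbers below are hypothetical placeholders, not our measurements, and the `summarize` helper is an illustrative name rather than part of any tool.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical trial log: (model label, task id, prompts needed, hallucinated?).
# These rows are made-up placeholders for illustration only.
trials = [
    ("gpt-4o", "task-1", 2, False),
    ("gpt-4o", "task-2", 3, False),
    ("gpt-5.1", "task-1", 4, True),
    ("gpt-5.1", "task-2", 6, False),
]


def summarize(rows):
    """Return {model: (mean prompts per task, hallucination rate)}."""
    by_model = defaultdict(list)
    for model, _task, prompts, hallucinated in rows:
        by_model[model].append((prompts, hallucinated))
    return {
        model: (
            mean(p for p, _ in results),
            sum(h for _, h in results) / len(results),
        )
        for model, results in by_model.items()
    }


summary = summarize(trials)

# Ratio of average prompts needed; a value near 2 would mean "double the prompts".
ratio = summary["gpt-5.1"][0] / summary["gpt-4o"][0]

for model, (avg_prompts, halluc_rate) in summary.items():
    print(f"{model}: {avg_prompts} prompts/task, {halluc_rate:.0%} hallucination rate")
print(f"prompt ratio: {ratio}")
```

Running the same task list against each model and keeping the acceptance criteria fixed matters more than the tallying itself; otherwise the ratio mostly reflects who was grading that day.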

Mixed Reception and Power User Frustration

Facebook groups and developer forums show divided reactions. Some users appreciate 5.1’s smoother tone in casual chat, while others report inconsistent factual performance and strange detours during problem-solving tasks. One post summarized it perfectly: “It sounds smarter but knows less.”

This pattern helps explain why technical operators and AI professionals are gravitating toward Claude Sonnet 4.5 or Grok for complex data retrieval. These models tend to maintain logical consistency longer and support deeper research sequences. Grok, in particular, has gained recognition for its strength at real-time data queries, something OpenAI’s models have yet to fully match.

Behind the Confusion: Model Strategy and User Perception

Consumers often assume that higher version numbers equal better results. With ChatGPT, though, a new version number doesn’t always mean an across-the-board improvement. Industry discussions on Hacker News (November 2025) suggest that GPT-5.1 might have been tuned more for conversational efficiency than technical precision. Some even suspect its behavior could align with commercial strategies such as faster token consumption, though that’s speculative and unconfirmed.

The issue is transparency. OpenAI rarely announces specific limitations. Users end up discovering them through real-world testing rather than official documentation, creating a trust and expectation gap that power users must bridge themselves.

What We’re Doing

For now, we’re leaning on ChatGPT 4o and Claude Sonnet 4.5 for research-heavy or technical workflows. Grok remains our top choice for live data retrieval. OpenAI’s smaller 8B and 10B variants have also proven surprisingly capable, offering better consistency for short, factual interactions.

If your team depends on accuracy, reliability, or technical depth, consider sticking with 4-series models or testing Claude before standardizing on 5.1. Upgrades should save time, not add confusion. We expect 5.x to mature into a stronger platform at some point, but until then, experience shows it’s wise to stay with what actually works.